New Options for High Availability using Hyper-V with Storage Spaces Direct (S2D)

Windows Server 2016 introduces a new feature called Storage Spaces Direct. Expanding on its existing software-defined storage technology, Microsoft now allows us to pool internal disks across multiple servers, meaning that you do not need a separate enclosure and extra cabling to enable a shared storage solution.

Shared storage is required for building a failover cluster. We could previously use SAS-attached JBODs or Scale-Out File Servers (SoFS) to deploy cost-effective high availability solutions for small and medium-sized businesses (SMBs). We now have even more options to choose from. Let’s quickly review two methods for providing Highly Available Virtual Machines (HAVMs) in Windows Server 2012 and 2012 R2, and then we can compare these options to Storage Spaces Direct (S2D).

Option 1: Simple Hyper-V Failover Cluster using SAS enclosure

This is the most commonly deployed solution in my client base. The majority of my clients only need a two-node failover cluster, and this requires nothing more than two host servers with SAS interface cards, and an external enclosure for holding the disks. Simple. Even standard 1 Gb networking is sufficient to build this solution, although having 10 Gb Ethernet will make your Live Migrations much quicker.

Notice that SoFS is NOT part of this solution, since SMB loopback is not allowed (i.e. you cannot access an SoFS application share from the same host that is running the application). You just need to pool your disks, create some volumes and bring them into the cluster as Cluster Shared Volumes (CSVs), and then you’re ready to go. Summary:

- Networking: 1 Gb is sufficient

- Storage: external enclosure / cabling required

- Compute: minimum two nodes

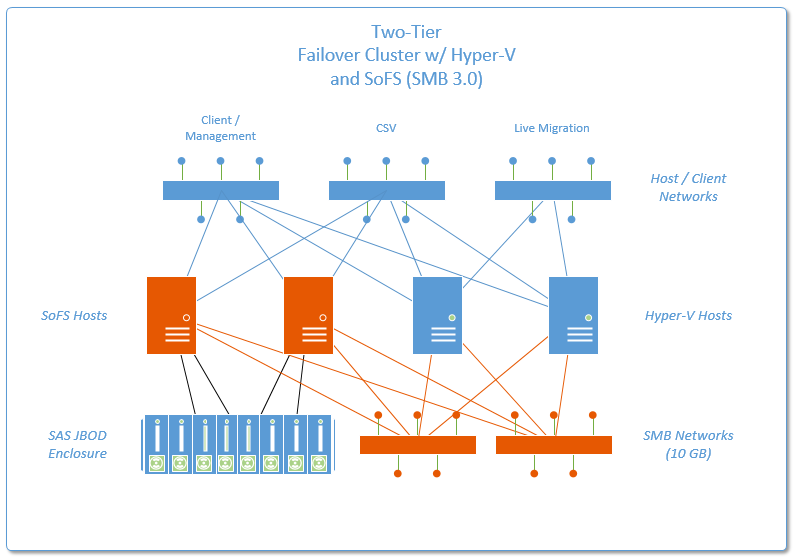

Option 2: Two-Tier Failover Cluster using SoFS + Hyper-V

In this option, you deploy two clusters. The first presents your storage via SMB 3.0 shares (SoFS), and at least 10 Gb networking is recommended for proper throughput. The second cluster runs Hyper-V and uses the application file shares on the SoFS cluster to store the virtual machine and virtual hard disk files. Summary:

- Networking: 10 Gb Ethernet is strongly recommended

- Storage: external enclosure / cabling required

- Compute: minimum of four nodes (2 x 2-node clusters)

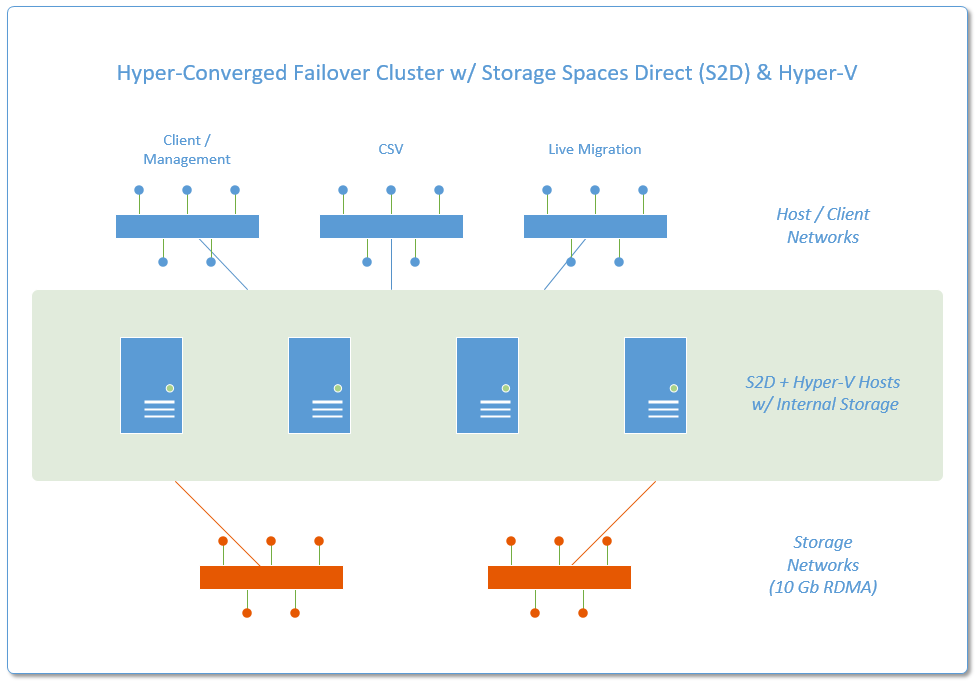

Option 3: Hyper-Converged Failover Cluster using S2D + Hyper-V

Now let’s pivot toward Windows Server 2016 and look at the new options we have using Storage Spaces Direct (S2D). The first one we will describe is a “Hyper-Converged” architecture, so called because storage and compute resources are bound within the same servers, so they scale in lock-step.

Want more storage? Add a new server with internal disks. Don’t care about adding more compute? Oh well, you’ve got it now anyway. Maybe that sounds like a drawback, and for some businesses it certainly would be (we’ll get to that next), but for a small or mid-sized organization, it may very well be more cost-effective than a multi-server + SAN solution. Summary:

- Networking: 10 Gb / RDMA required

- Storage: internal disks for all nodes

- Compute: minimum four nodes
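
To make the lock-step point concrete, here is a minimal Python sketch. The function names and the per-node figures (16 cores, 20 TB of raw disk) are made up purely for illustration, and the math deals in raw capacity only, ignoring any resiliency overhead. The four-node floor comes from the summary above.

```python
import math

MIN_NODES = 4  # minimum node count from the Option 3 summary above


def nodes_needed_for_storage(target_raw_tb, raw_tb_per_node, min_nodes=MIN_NODES):
    """Nodes required to reach a raw storage target (hypothetical helper)."""
    return max(min_nodes, math.ceil(target_raw_tb / raw_tb_per_node))


def hyperconverged_totals(nodes, cores_per_node, raw_tb_per_node):
    """Compute and storage grow together: both are multiples of the node count."""
    return {"cores": nodes * cores_per_node, "raw_tb": nodes * raw_tb_per_node}


# Illustrative figures only: 100 TB raw target, 20 TB and 16 cores per node.
nodes = nodes_needed_for_storage(100, 20)
totals = hyperconverged_totals(nodes, 16, 20)
```

Sizing for storage alone drags the core count up with it, which is exactly the trade-off described above: you cannot buy one without the other.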

Option 4: Two-Tier S2D Design with SoFS + Hyper-V

This solution is meant for mid-sized and larger organizations requiring a more flexible architecture (e.g. for building private clouds). In this model, storage and compute can be scaled out separately. One cluster would be for SoFS built on S2D, and the other for Hyper-V. Summary:

- Networking: 10 Gb / RDMA required

- Storage: internal disks for SoFS nodes

- Compute: minimum six nodes (4x S2D/SoFS + 2x Hyper-V)
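
For a quick side-by-side, the four summaries above can be captured in a small Python sketch. The `Option` class, its field names, and the `candidates` helper are my own shorthand, not anything from Microsoft; the values are simply copied from the summaries in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    min_nodes: int            # minimum total servers across all clusters
    networking: str           # networking note from the summary
    external_enclosure: bool  # True if an external enclosure / cabling is needed


OPTIONS = [
    Option("Option 1: Hyper-V cluster + SAS enclosure", 2, "1 Gb is sufficient", True),
    Option("Option 2: SoFS + Hyper-V (two-tier)", 4, "10 Gb strongly recommended", True),
    Option("Option 3: Hyper-converged S2D + Hyper-V", 4, "10 Gb / RDMA required", False),
    Option("Option 4: S2D SoFS + Hyper-V (two-tier)", 6, "10 Gb / RDMA required", False),
]


def candidates(max_nodes, allow_external_enclosure=True):
    """Return the options that fit a server budget (hypothetical helper)."""
    return [
        o for o in OPTIONS
        if o.min_nodes <= max_nodes
        and (allow_external_enclosure or not o.external_enclosure)
    ]


# Example: a shop with two servers versus one that wants no external enclosure.
two_server_choices = candidates(2)
no_enclosure_choices = candidates(4, allow_external_enclosure=False)
```

With a two-server budget only Option 1 survives the filter, and ruling out external enclosures at four servers leaves only the hyper-converged design.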

Conclusions

When it comes to small businesses (the type I most frequently work with), the most cost-effective option is going to remain Option 1: a simple two-node failover cluster using SAS-connected external enclosures. That’s the “S” of SMB. For larger small businesses or medium-sized businesses, I think that the hyper-converged solution is also pretty interesting. It sits somewhere between Option 1 and Option 2, and it does reduce at least some hardware complexity.

Love ’em or hate ’em, what I appreciate most about Microsoft is that there is never any shortage of options. Since the release of Windows Server 2012, they have continuously delivered more and better selections. Certainly, this makes for some confusion in the marketplace, and the anxiety of analysis paralysis can easily set in. But hey, that’s how guys like me stay relevant, right? Can’t complain about that!
